Java Cache Tutorial with Cache Dependency Injection (CDI)

From Resin 4.0 Wiki


Latest revision as of 20:07, 10 July 2013

Faster application performance is possible with Java caching, which saves the results of long calculations and reduces database load. The Java caching API is being standardized as JCache (JSR 107). In combination with Java Contexts and Dependency Injection (CDI), you can use caching in a completely standard fashion in the Resin Application Server. You'll typically want to look at caching when your application starts slowing down, or when your database or other expensive resource starts getting overloaded. Caching is useful when you want to:

- Improve latency

- Reduce database load

- Reduce CPU use

This example defines a single persistent cache named "my-cache" in WEB-INF/resin-web.xml (this part is, of course, specific to the Resin Application Server). Once the cache is defined, the standard JCache javax.cache.Cache object can be injected into your class with the standard CDI @Inject annotation and used.

For a more complete discussion of caching see Introduction: Java Cache.


Defining ClusterCache in XML configuration

The definition selects the Resin ClusterCache implementation (you can also use a LocalCache for a single-server cache), gives it a javax.inject.Named name of "my-cache", and configures it. Here we only configure the name and set the expire time to one hour (by default, entries never expire).

WEB-INF/resin-web.xml defining ClusterCache

```xml
<web-app xmlns="http://caucho.com/ns/resin"
         xmlns:ee="urn:java:ee"
         xmlns:resin="urn:java:com.caucho.resin">

  <resin:ClusterCache ee:Named="my-cache">
    <name>my-cache</name>
    <modified-expire-timeout>1h</modified-expire-timeout>
  </resin:ClusterCache>

</web-app>
```
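To make the expiration rule concrete, here is a minimal in-memory model of what modified-expire-timeout does. It assumes the timeout is measured from an entry's most recent put(). This is not Resin's implementation. The ExpiringCache class and its injectable clock are hypothetical, chosen so expiration can be tested without waiting an hour.

```java
import java.time.Duration;
import java.util.HashMap;
import java.util.Map;
import java.util.function.LongSupplier;

// Hypothetical model of "modified-expire-timeout": an entry stops being
// visible once the timeout has passed since it was last written.
// Not Resin's implementation.
class ExpiringCache<K, V> {
  private static final class Entry<T> {
    final T value;
    final long modifiedAtMillis;

    Entry(T value, long modifiedAtMillis) {
      this.value = value;
      this.modifiedAtMillis = modifiedAtMillis;
    }
  }

  private final Map<K, Entry<V>> entries = new HashMap<>();
  private final long timeoutMillis;
  private final LongSupplier clock; // injectable so expiration can be tested

  ExpiringCache(Duration modifiedExpireTimeout, LongSupplier clock) {
    this.timeoutMillis = modifiedExpireTimeout.toMillis();
    this.clock = clock;
  }

  synchronized void put(K key, V value) {
    // Every write resets the entry's modification time.
    entries.put(key, new Entry<>(value, clock.getAsLong()));
  }

  synchronized V get(K key) {
    Entry<V> e = entries.get(key);
    if (e == null) {
      return null;
    }
    if (clock.getAsLong() - e.modifiedAtMillis >= timeoutMillis) {
      entries.remove(key); // expired: behave like a cache miss
      return null;
    }
    return e.value;
  }
}
```

With a one-hour timeout, an entry written at time 0 is still returned at 59 minutes. From 60 minutes on, get() returns null, which matches the behavior described for my-cache.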

CDI inject of javax.cache.Cache

In the MyService class, we inject the cache that we defined using the CDI @Inject and @Named annotations. Normally, CDI recommends that you create custom qualifier annotations instead of using @Named, but to keep the example simple, we're giving it a simple name.

The Cache object can be used somewhat like a java.util.Map. Here we just use the get() and put() methods. Because we set the modified-expire-timeout to 1h, get() will return null an hour after the data was populated.

org/example/MyService.java injecting the Cache

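```java
package org.example;

import javax.cache.Cache;
import javax.inject.Inject;
import javax.inject.Named;

public class MyService {
  @Inject @Named("my-cache")
  private Cache<String,String> _cache;

  public String doStuff(String key)
  {
    String cachedValue = _cache.get(key);

    if (cachedValue == null) {
      cachedValue = doLongCalculation(key);

      // JCache rejects null values, so doLongCalculation must not return null
      _cache.put(key, cachedValue);
    }

    return cachedValue;
  }

  public String doLongCalculation(String key)
  {
    // database, REST, XML-parsing, etc.
    throw new UnsupportedOperationException("replace with your expensive calculation");
  }
}
```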

Notice that all the code uses standard APIs without any need to reference Resin classes. Since only the configuration needs to select the Resin cache, your application can easily switch cache implementations.

You can compare this technique with using the @CacheResult annotation described in Java Cache Tutorial with Method Annotations (CDI).
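The get(), null check, put() sequence in doStuff is the cache-aside pattern. One subtlety is that if two threads miss on the same key at the same moment, both run doLongCalculation. The sketch below runs on a plain JDK because it substitutes a ConcurrentHashMap for the injected javax.cache.Cache. That substitution is an assumption, not Resin's API. The sketch keeps the original shape and adds a variant built on ConcurrentHashMap.computeIfAbsent, which applies the calculation at most once while a key is absent.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Function;

// Cache-aside sketch: a ConcurrentHashMap stands in for javax.cache.Cache
// so the example runs on a plain JDK.
class CacheAsideService {
  private final ConcurrentHashMap<String, String> cache = new ConcurrentHashMap<>();
  private final Function<String, String> longCalculation;
  private final AtomicInteger calculations = new AtomicInteger();

  CacheAsideService(Function<String, String> longCalculation) {
    this.longCalculation = longCalculation;
  }

  // Same shape as doStuff(): check the cache, compute on a miss, store the result.
  String doStuff(String key) {
    String cached = cache.get(key);
    if (cached == null) {
      cached = doLongCalculation(key);
      cache.put(key, cached); // throws NullPointerException if the result is null
    }
    return cached;
  }

  // Variant: computeIfAbsent runs the calculation at most once per absent key,
  // even when several threads miss on the same key at the same time.
  String doStuffAtomically(String key) {
    return cache.computeIfAbsent(key, this::doLongCalculation);
  }

  String doLongCalculation(String key) {
    calculations.incrementAndGet();
    return longCalculation.apply(key);
  }

  int calculationCount() {
    return calculations.get();
  }
}
```

computeIfAbsent holds a lock on part of the map while the calculation runs. It therefore suits calculations that do not themselves modify the same map.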

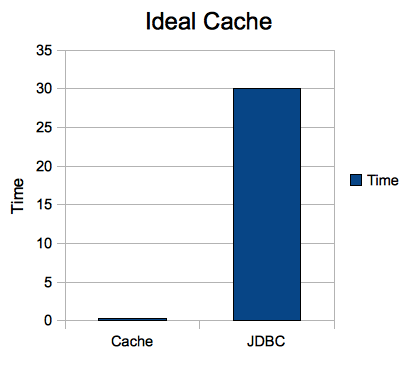

Cache Performance example

Since reducing database load is a typical cache benefit, it's useful to create a micro-benchmark to see how much a cache can help. This is just a simple test with MySQL running on the same server and a trivial query. In other words, it isn't trying to exaggerate the value of the cache: almost any real cache use will have a longer "doLongCalculation" than this simple example, so the cache will benefit even more.


The micro-benchmark runs a simple JDBC query, "SELECT value FROM test WHERE id=?", in the "doLongCalculation" method. To get useful data, the call to "doStuff" is repeated 300k times and compared with calling "doLongCalculation" directly 300k times.

| Type | Total time (300k calls) | Throughput (requests/ms) | MySQL CPU |
|---|---|---|---|
| JDBC (direct) | 30 s | 10.0 | 35% |
| Cache | 0.3 s | 1095 | 0% |

Even this simple test shows how caches can win. The cached calls are significantly faster, and they take the load off the database.

- About 100x faster (1095 vs. 10.0 requests per millisecond)

- Removes the MySQL load (0% vs. 35% CPU)
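The table's columns are related by throughput = calls / elapsed time, with N = 300,000 calls. Working through the figures:

```latex
\text{throughput} = \frac{N}{t}, \qquad
\frac{300{,}000}{30{,}000\ \text{ms}} = 10\ \text{req/ms}, \qquad
\frac{300{,}000\ \text{req}}{1095\ \text{req/ms}} \approx 274\ \text{ms} \approx 0.3\ \text{s}, \qquad
\frac{1095\ \text{req/ms}}{10\ \text{req/ms}} \approx 110
```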

To get more realistic numbers, you'll need to benchmark the difference on a full application. Micro-benchmarks like this are useful to explain concepts, but real benchmarks require testing against your own application, in combination with profiling. For example, Resin's simple profiling capabilities in /resin-admin or in the pdf-report can give you quick, simple data on your application's performance.
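The shape of the 300k-call micro-benchmark can be sketched without a database. In this hypothetical harness, doLongCalculation burns a little CPU in place of the JDBC query, and a HashMap stands in for the cache. The calls cycle through 100 distinct ids. The article doesn't say how many ids it used, so that number is an assumption. Timings from a loop like this, with no JIT warm-up, are only rough. A harness such as JMH gives more trustworthy numbers.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical micro-benchmark harness: direct calls vs. cached calls.
class CacheMicroBenchmark {
  static final int CALLS = 300_000;     // matches the 300k calls in the article
  static final int DISTINCT_KEYS = 100; // assumption: not stated in the article

  private final Map<Integer, String> cache = new HashMap<>();
  long calculations = 0;

  // Stand-in for the JDBC query: burns a little CPU instead of calling MySQL.
  String doLongCalculation(int id) {
    calculations++;
    long h = id;
    for (int i = 0; i < 1_000; i++) {
      h = h * 31 + i;
    }
    return Long.toString(h);
  }

  // Cache-aside lookup, same shape as doStuff() in MyService.
  String doStuff(int id) {
    String value = cache.get(id);
    if (value == null) {
      value = doLongCalculation(id);
      cache.put(id, value);
    }
    return value;
  }

  // Requests per millisecond for a given number of calls and elapsed time.
  static double requestsPerMs(int calls, long elapsedNanos) {
    return calls / (elapsedNanos / 1_000_000.0);
  }

  public static void main(String[] args) {
    CacheMicroBenchmark bench = new CacheMicroBenchmark();
    long sink = 0; // consumes results so the JIT can't discard the work

    long start = System.nanoTime();
    for (int i = 0; i < CALLS; i++) {
      sink += bench.doLongCalculation(i % DISTINCT_KEYS).length();
    }
    long directNanos = System.nanoTime() - start;

    start = System.nanoTime();
    for (int i = 0; i < CALLS; i++) {
      sink += bench.doStuff(i % DISTINCT_KEYS).length();
    }
    long cachedNanos = System.nanoTime() - start;

    System.out.printf("direct: %.1f req/ms%n", requestsPerMs(CALLS, directNanos));
    System.out.printf("cached: %.1f req/ms%n", requestsPerMs(CALLS, cachedNanos));
    System.out.println("checksum: " + sink);
  }
}
```

During the cached run, doLongCalculation executes only once per distinct id. Every other call is a map lookup, which is why the cached throughput pulls so far ahead.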

For a more complete discussion of cache performance see Introduction: Java Cache.

Cache Consistency (Updating the Cache)

When your application modifies the data in the database, you should update the Cache as well. If you consistently update the cache each time you update or delete an entry, you can improve both cache performance and consistency, because the cached data will not go out of date. Using this pattern, your cache becomes more of a true store rather than a dated snapshot of the data.

org/example/MyService.java updating the Cache for consistency

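```java
package org.example;

import javax.cache.Cache;
import javax.inject.Inject;
import javax.inject.Named;

public class MyService {
  @Inject @Named("my-cache")
  private Cache<String,String> _cache;

  public String getData(String key)
  {
    String cachedValue = _cache.get(key);

    if (cachedValue == null) {
      cachedValue = getFromDatabase(key);

      if (cachedValue != null) { // JCache does not accept null values
        _cache.put(key, cachedValue);
      }
    }

    return cachedValue;
  }

  public void putData(String key, String value)
  {
    // Save to the database first, so a failed write can't leave the cache
    // holding a value the database never stored.
    saveIntoDatabase(key, value);
    _cache.put(key, value);
  }

  public void removeData(String key)
  {
    removeFromDatabase(key);
    _cache.remove(key);
  }

  public void clearCache()
  {
    _cache.removeAll();
  }

  // Application-specific database access (JDBC, JPA, etc.), left unimplemented here.
  private String getFromDatabase(String key) { throw new UnsupportedOperationException(); }
  private void saveIntoDatabase(String key, String value) { throw new UnsupportedOperationException(); }
  private void removeFromDatabase(String key) { throw new UnsupportedOperationException(); }
}
```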

The Cache.removeAll() method is typically used when the database is modified outside of the application, for example when you modify the database directly with SQL.

For the annotation-based configuration, you can use @CacheResult, @CachePut, @CacheRemoveEntry, and @CacheRemoveAll. (In the final JSR 107 specification, @CacheRemoveEntry is named @CacheRemove.)
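You can check the effect of updating the cache on every write without Resin or MySQL. In this sketch, two HashMaps stand in for the database and for javax.cache.Cache. Both substitutions are assumptions. ConsistentService mirrors getData, putData, removeData and clearCache. It is single-threaded and only illustrates the bookkeeping.

```java
import java.util.HashMap;
import java.util.Map;

// Consistency sketch: HashMaps stand in for MySQL and javax.cache.Cache.
// Single-threaded; illustrates the bookkeeping only.
class ConsistentService {
  final Map<String, String> database = new HashMap<>();
  private final Map<String, String> cache = new HashMap<>();
  int databaseReads = 0;

  String getData(String key) {
    String value = cache.get(key);
    if (value == null) {
      databaseReads++;
      value = database.get(key);
      if (value != null) { // misses are not cached in this sketch
        cache.put(key, value);
      }
    }
    return value;
  }

  void putData(String key, String value) {
    database.put(key, value); // write the system of record first
    cache.put(key, value);    // then refresh the cache so readers see the new value
  }

  void removeData(String key) {
    database.remove(key);
    cache.remove(key);
  }

  void clearCache() {
    cache.clear(); // plays the role of Cache.removeAll() after out-of-band SQL changes
  }
}
```

Values written through putData are served from the cache without a database read. A direct change to the database stays invisible until clearCache() discards the stale entries.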

Cache Considerations

- size: If your data set is large, the cache size can limit how much of your data fits, resulting in a high miss rate and poor performance. A cache that can page memory out to disk, like Resin's cache, allows cache sizes limited only by your disk space, not by your JVM heap space.

- persistence: Memory-only caches like memcached are cleared when a server restarts, forcing cache misses until the cache is refilled. With a persistent cache like Resin's cache, a restart loads the most recent data, sparing your database a big load spike.

- sharding: With a heavily loaded cache, sharding can increase the cache size and performance by splitting the cache among multiple cache servers. With sharding, exactly one cache server "owns" each cache entry, reducing conflicts. Resin's cache automatically shards the cache across the three triad hub servers. When Resin's cache has its own cluster, the cache can be sharded across all the servers in that cluster.

- consistency: If your cache has multiple "owner" servers, for example in a non-sharded cache, simultaneous updates to two servers can conflict, much like a synchronization issue. A sharded architecture reduces this problem by allowing only one server to own each entry.

- near/local caches: When you have a small number of frequently used, rarely changing objects, an internal local or near cache can give better performance. Since the shard remains the primary owner, the local copy can be slightly out of date, by a configurable amount of time. Writes are always written through to the primary owner.
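The near-cache trade-off in the last item can be modeled in a few lines. The hypothetical NearCache below keeps a local copy in front of a Map that stands in for the shard that owns the entry. Reads are served locally until the copy is older than maxStalenessMillis. Writes always go through to the owner. This illustrates the idea only; it is not Resin's near-cache implementation.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.LongSupplier;

// Near-cache sketch: a local copy in front of a primary owner. Reads may lag
// the owner by less than maxStalenessMillis; writes always go through to the owner.
// The "owner" Map stands in for the shard that owns the entry (an assumption).
class NearCache<K, V> {
  private static final class Local<T> {
    final T value;
    final long fetchedAtMillis;

    Local(T value, long fetchedAtMillis) {
      this.value = value;
      this.fetchedAtMillis = fetchedAtMillis;
    }
  }

  private final Map<K, V> owner;
  private final Map<K, Local<V>> local = new HashMap<>();
  private final long maxStalenessMillis;
  private final LongSupplier clock;

  NearCache(Map<K, V> owner, long maxStalenessMillis, LongSupplier clock) {
    this.owner = owner;
    this.maxStalenessMillis = maxStalenessMillis;
    this.clock = clock;
  }

  V get(K key) {
    long now = clock.getAsLong();
    Local<V> copy = local.get(key);
    if (copy != null && now - copy.fetchedAtMillis < maxStalenessMillis) {
      return copy.value; // served locally; may be slightly out of date
    }
    V value = owner.get(key); // refresh from the primary owner
    local.put(key, new Local<>(value, now));
    return value;
  }

  void put(K key, V value) {
    owner.put(key, value); // write-through to the primary owner
    local.put(key, new Local<>(value, clock.getAsLong()));
  }
}
```

Suppose another server updates the owner. This node keeps returning its local copy until the copy is maxStalenessMillis old. That is the "slightly out-of-date by a configurable time" behavior described in the list.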