NginX 1.2.0 versus Resin 4.0.29 performance tests

From Resin 4.0 Wiki


Recently, we decided to spend some extra time improving Resin's performance and scalability. Since we like challenges, we set a goal of meeting or beating nginx, a fast C-based web server. When working on performance, we use benchmarks to see which proposed changes improve Resin's performance and which do not. The autobench/httperf benchmark is particularly interesting because it simulates high load rates and exposes scalability issues better than some other benchmarks, such as ab. After completing the Resin performance work, we found that we had exceeded our goal and were actually able to beat nginx, so we thought we'd share our results.

We recently ran performance benchmarks comparing Resin 4.0.29 with nginx 1.2.0. These benchmarks show that Resin Pro matches or exceeds nginx's throughput.

We tested 0K (empty HTML page), 1K, 8K and 64K byte files. At every file size, Resin matched or exceeded nginx's performance.

==Benchmark tools==
The benchmark tests used the following tools:
* httperf
* AutoBench

===httperf===
[http://www.hpl.hp.com/research/linux/httperf/ httperf] is a tool produced by HP for measuring web server performance. It supports HTTP/1.1 keepalives and SSL.
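As a minimal illustration of a single httperf run, the sketch below prints (but does not execute) one invocation at a fixed connection rate. The options it uses (--hog, --server, --port, --uri, --num-conns, --num-calls, --rate, --timeout) are standard httperf flags; the helper name '''httperf_step''' is hypothetical, and the values only echo those used later on this page (10 calls per connection, 3-second timeout). The command line autobench actually builds may include other flags.

```shell
#!/bin/sh
# Hypothetical helper: print (do not run) one httperf invocation that opens
# CONNS connections to HOST:PORT at RATE connections per second,
# sending 10 requests per connection, with a 3-second timeout.
httperf_step() {
  host=$1 port=$2 uri=$3 conns=$4 rate=$5
  # --hog lets httperf use as many local TCP ports as it needs,
  # which matters at high connection rates.
  echo httperf --hog --server "$host" --port "$port" --uri "$uri" \
    --num-conns "$conns" --num-calls 10 --rate "$rate" --timeout 3
}

httperf_step ch_resin 8080 /file_0k.html 300000 2000
```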

===AutoBench===

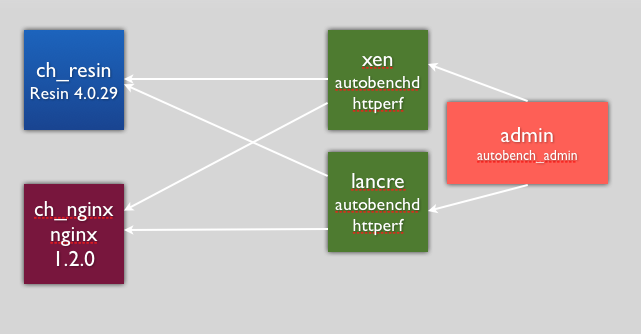

[http://www.xenoclast.org/autobench/ Autobench] is a tool for automating comparative benchmark tests of two web servers. '''Autobench''' uses '''httperf''': it runs httperf against each host and increases the rate on each iteration. Autobench writes its output in a format that spreadsheet tools can easily consume. Autobench also has a mode in which it drives multiple clients against a set of servers, which reduces the chance of measuring client throughput instead of server throughput. The '''autobenchd''' command runs a daemon on each client machine, and the '''autobench_admin''' command communicates with the '''autobenchd''' daemons so that all clients run the test at the same time.
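The comparative sweep can be pictured as a loop over connection rates. The sketch below is a dry run that prints what would be measured instead of calling httperf; it assumes each rate is tried against host1 and then host2, using the host names from this test's admin.sh. The function name '''sweep''' is hypothetical, and this is not autobench's actual source.

```shell
#!/bin/sh
# Dry-run sketch of a comparative rate sweep: starting at LOW and adding
# STEP until HIGH is passed, each connection rate is tried against both
# servers (assumed order: host1, then host2). Lines are printed instead
# of running httperf.
sweep() {
  low=$1 high=$2 step=$3
  rate=$low
  while [ "$rate" -le "$high" ]; do
    echo "rate=$rate host=ch_resin"
    echo "rate=$rate host=ch_nginx"
    rate=$((rate + step))
  done
}

sweep 2000 4000 1000
```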

====Setup Overview====
[[Image:setup_benchmark_diagram.png]]

===Configuration===
The only configuration change made was setting '''worker_processes''' to 8 for nginx, to improve throughput.
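In nginx, '''worker_processes''' is set in the main (top-level) context of nginx.conf. The page does not show the rest of the configuration, so the sketch below only prints that one directive. The value 8 equals the number of hardware threads on the test server (4 cores with Hyper-Threading, per the specifications). The fallback to '''nproc''' when no count is given is an illustrative assumption, not part of the tested setup, and the function name is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print the nginx directive changed for this test.
# With no argument, the count falls back to the number of online CPUs
# reported by nproc (an illustrative default, not the original setup).
nginx_workers_directive() {
  count=${1:-$(nproc)}
  printf 'worker_processes %s;\n' "$count"
}

nginx_workers_directive 8
```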

===Hardware and Software Specifications===

====Client HW/OS specs====
* Intel Core i7, 4 cores / 8 hyper-threads, 2.8 GHz, 8 MB cache, 8 GB RAM
* Ubuntu 12 / Linux kernel 3.2.0-26-generic

====Server HW/OS specs====
* Intel Core i7, 4 cores / 8 hyper-threads, 2.8 GHz, 8 MB cache, 8 GB RAM
* Ubuntu 12 / Linux kernel 3.2.0-26-generic

====Test software====
* Autobench 2.1.1
* httperf 0.9.0

====Software under test====
* Resin Pro 4.0.29
* nginx 1.2.0
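To compare your own client and server machines with the specifications above before running a similar test, a small script like the following prints the relevant details. It is a hypothetical helper, not part of the original test kit; '''nproc''' and '''uname''' are standard on Ubuntu, and reading /proc/meminfo assumes Linux.

```shell
#!/bin/sh
# Hypothetical helper: print online CPUs (hardware threads), kernel
# release, and total memory in kB. /proc/meminfo is Linux-specific.
specs() {
  echo "cpus=$(nproc)"
  echo "kernel=$(uname -r)"
  awk '/^MemTotal:/ { printf "mem_kb=%s\n", $2 }' /proc/meminfo
}

specs
```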

==0k test==

We want to make sure Resin can handle as many concurrent connections as possible without glitches or blocking. The tiny 0k file is a good test of high concurrency: because each response is so small, little time goes to network transfer and more goes to stressing the threading and internal locks. Running on a server with 8 hardware threads (4 cores with Hyper-Threading) helps expose unnecessary locking or timing problems.

For most sites, the small-file stress test simulates heavy Ajax use and small-file use. As sites become more interactive, this small-file test becomes ever more important.

As a comparison of a threaded Java web server with an event-based C web server, the 0k test is a good test of thread and event-manager dispatch. Because most of the test time is spent establishing connections or switching between requests at the highest possible rate, both the threading and the event management get a tough workout.

===Command Line Arguments===

====0k.sh====
<pre>
./admin.sh 300000 2000 20000 1000 0k
</pre>

====admin.sh====
<pre>
#!/bin/sh
# Usage: ./admin.sh NUM_CONN LOW_RATE HIGH_RATE RATE_STEP SIZE
# Backslashes continue the single autobench_admin command across lines.
autobench_admin \
  --clients xen:4600,lancre:4600 \
  --uri1 "/file_$5.html" \
  --host1 ch_resin --port1 8080 \
  --uri2 "/file_$5.html" \
  --host2 ch_nginx --port2 80 \
  --num_conn "$1" \
  --num_call 10 \
  --low_rate "$2" \
  --high_rate "$3" \
  --rate_step "$4" \
  --timeout 3 \
  --file "out_con$1_start$2_end$3_step$4_$5.tsv"
</pre>

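The 0k run above sets up 300,000 connections (--num_conn 300000). The rate options are connection rates: the sweep starts at 2,000 and ends at 20,000 connections per second, increasing by 1,000 on each iteration. Because each connection carries 10 requests (--num_call 10), this corresponds to 20,000 to 200,000 requests per second, increasing by 10,000 requests per second on each iteration.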
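Autobench's rate options (2,000 to 20,000 in 0k.sh) are connection rates; with '''--num_call 10''' each connection carries 10 requests, which yields the 20,000 to 200,000 requests per second quoted for this run. The hypothetical helper below (name assumed) prints the request rate for every step of a sweep; it is pure arithmetic and contacts no server.

```shell
#!/bin/sh
# Hypothetical helper: given admin.sh's LOW_RATE, HIGH_RATE and RATE_STEP
# (connections per second) and the calls per connection, print each
# step's request rate (requests per second).
request_rates() {
  low=$1 high=$2 step=$3 calls=$4
  rate=$low
  while [ "$rate" -le "$high" ]; do
    echo $((rate * calls))
    rate=$((rate + step))
  done
}

# 0k run: ./admin.sh 300000 2000 20000 1000 0k, with --num_call 10
request_rates 2000 20000 1000 10
```

For the 1K run (./admin.sh 200000 1000 10000 250 1k), the same calculation, request_rates 1000 10000 250 10, gives 10,000 to 100,000 requests per second in steps of 2,500.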

===0k HTML file (file_0k.html)===
<pre>
<html>
<body>
<pre></pre>
</body>
</html>
</pre>

===Results for the 0k test===

[[Image:Resin_nginx_0k_full2.png]]

[[Image:Resin_nginx_response_time.png]]

[[Image:Nginx_resin_0k_IO_throughput.png]]

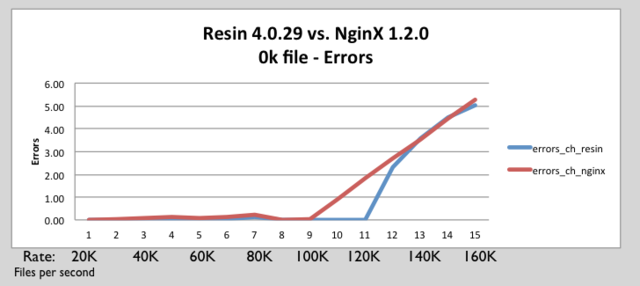

[[Image:Nginx_resin_errors_0k.png]]

==1K test==

===Command line===

====1k.sh====
<pre>
./admin.sh 200000 1000 10000 250 1k
</pre>

====admin.sh====
<pre>
#!/bin/sh
# Usage: ./admin.sh NUM_CONN LOW_RATE HIGH_RATE RATE_STEP SIZE
# Backslashes continue the single autobench_admin command across lines.
autobench_admin \
  --clients xen.caucho.com:4600,lancre.caucho.com:4600 \
  --uri1 "/file_$5.html" \
  --host1 ch_resin --port1 8080 \
  --uri2 "/file_$5.html" \
  --host2 ch_nginx --port2 80 \
  --num_conn "$1" \
  --num_call 10 \
  --low_rate "$2" \
  --high_rate "$3" \
  --rate_step "$4" \
  --timeout 3 \
  --file "out_con$1_start$2_end$3_step$4_$5.tsv"
</pre>

====1k.html====
<pre>
<html>
<body>
<pre>
0 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789
1 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789
2 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789
3 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789
4 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789
5 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789
6 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789
7 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789
8 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789
9 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789
</pre>
</body>
</html>
</pre>
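Because the 1k page is ten identical rows of digits, it can be regenerated instead of copied. The page does not say how file_1k.html was created; the generator below is a hypothetical sketch that reproduces the listing with a single trailing newline, which comes to 1,053 bytes. The real file's exact whitespace is not shown, so its size may differ slightly.

```shell
#!/bin/sh
# Hypothetical generator for the 1k test page: ten rows, each a row digit
# followed by nine copies of "0123456789", wrapped in <pre>.
digit_rows() {
  for i in 0 1 2 3 4 5 6 7 8 9; do
    printf '%s' "$i"
    for _ in 1 2 3 4 5 6 7 8 9; do
      printf ' 0123456789'
    done
    printf '\n'
  done
}

gen_1k() {
  printf '<html>\n<body>\n<pre>\n'
  digit_rows
  printf '</pre>\n</body>\n</html>\n'
}

# Usage: gen_1k > file_1k.html
```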

===Results for the 1K test===

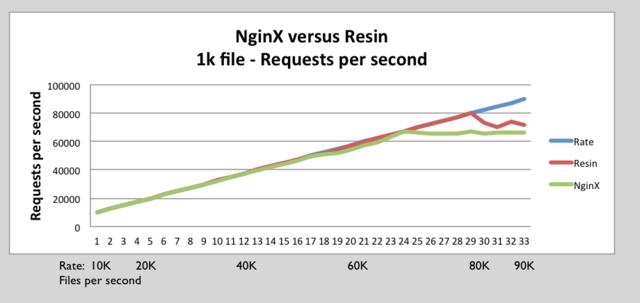

[[Image:Resin_nginx_1k_requests_per_second.png]]

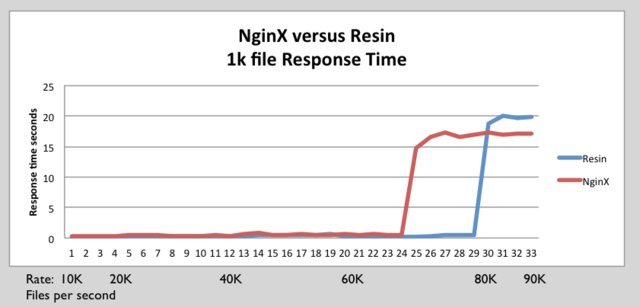

[[Image:Resin_nginx_1k_response_time.png]]

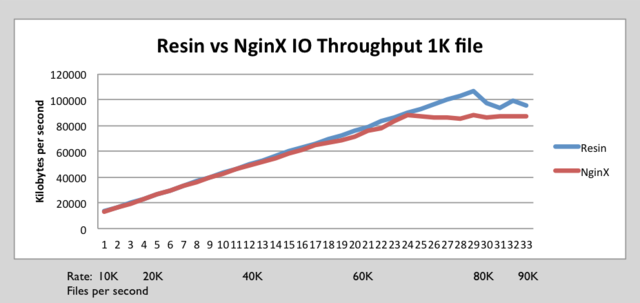

[[Image:Resin_nginx_IO_1k.png]]

[[Image:Resin_nginx_1k_errors.png]]

==64K test==

===Results===
PUT IMAGES HERE

===Command line===

====64k.sh====
<pre>
./admin.sh 10 50 1500 50 64k
</pre>

====admin.sh====
<pre>
#!/bin/sh
# Usage: ./admin.sh NUM_CONN LOW_RATE HIGH_RATE RATE_STEP SIZE
# Backslashes continue the single autobench_admin command across lines.
autobench_admin \
  --clients xen.caucho.com:4600,lancre.caucho.com:4600 \
  --uri1 "/file_$5.html" \
  --host1 ch_resin --port1 8080 \
  --uri2 "/file_$5.html" \
  --host2 ch_nginx --port2 80 \
  --num_conn "$1" \
  --num_call 10 \
  --low_rate "$2" \
  --high_rate "$3" \
  --rate_step "$4" \
  --timeout 3 \
  --file "out_con$1_start$2_end$3_step$4_$5.tsv"
</pre>

====64K test file====
<pre>
<html>
<body>
<pre>
0
0 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789
1 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789
2 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789
3 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789
4 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789
5 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789
6 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789
7 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789
8 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789
9 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789


[Repeats 63 more times, there are 0-7 blocks repeated 0-7 times]

</pre>
</body>
</html>
</pre>
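The 64k page can be generated the same way. According to the note in the listing, the ten-row block appears 64 times in total. The labeling scheme for the repeated blocks is not clear from that note, so this hypothetical generator simply labels the blocks 0 through 63; under that assumption the output is 64,865 bytes, close to 64K. Any blank lines in the real file are not reproduced.

```shell
#!/bin/sh
# Hypothetical generator for the 64k test page: 64 blocks, each a label
# line followed by ten digit rows (row digit plus nine "0123456789").
digit_rows() {
  for i in 0 1 2 3 4 5 6 7 8 9; do
    printf '%s' "$i"
    for _ in 1 2 3 4 5 6 7 8 9; do
      printf ' 0123456789'
    done
    printf '\n'
  done
}

gen_64k() {
  printf '<html>\n<body>\n<pre>\n'
  block=0
  while [ "$block" -lt 64 ]; do
    printf '%s\n' "$block"   # block label (numbering scheme is an assumption)
    digit_rows
    block=$((block + 1))
  done
  printf '</pre>\n</body>\n</html>\n'
}

# Usage: gen_64k > file_64k.html
```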

Latest revision as of 00:00, 14 September 2012

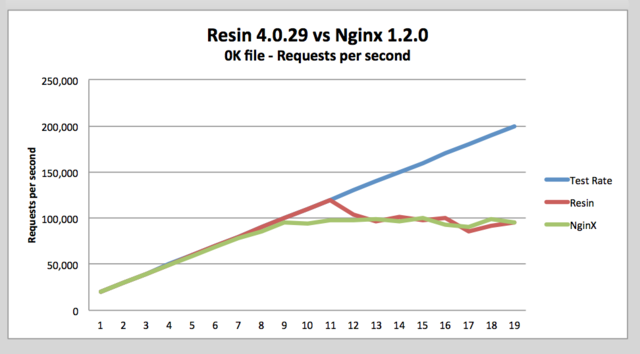

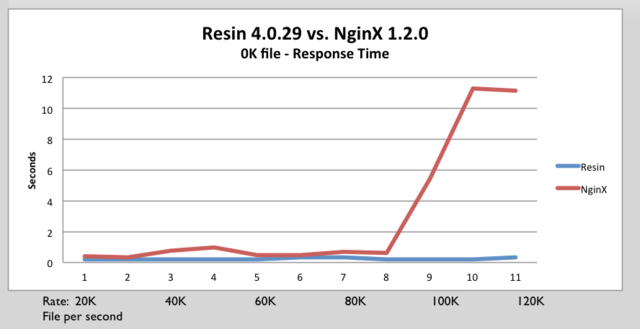

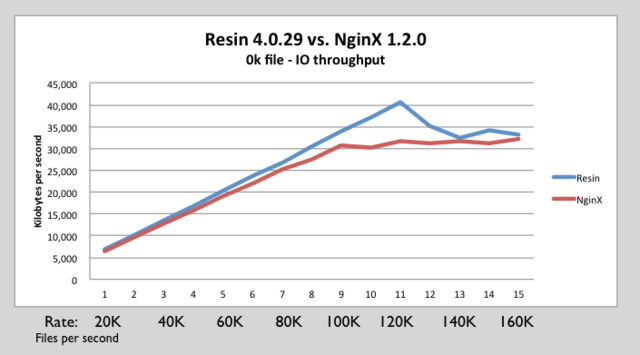

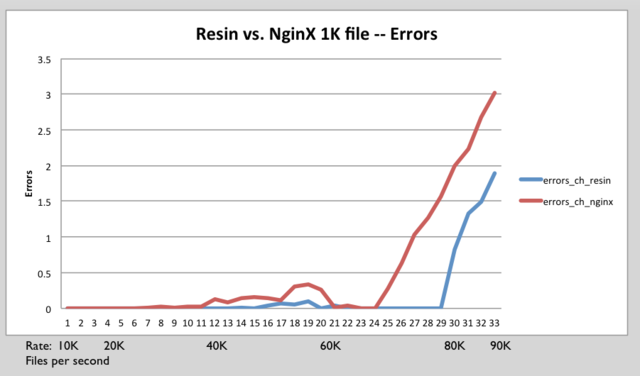
