NginX 1.2.0 versus Resin 4.0.29 performance tests

From Resin 4.0 Wiki


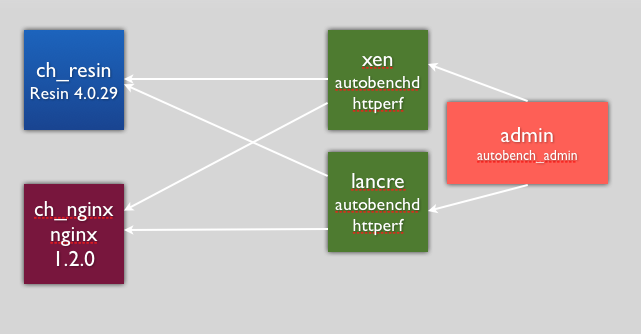


Revision as of 00:00, 14 August 2012

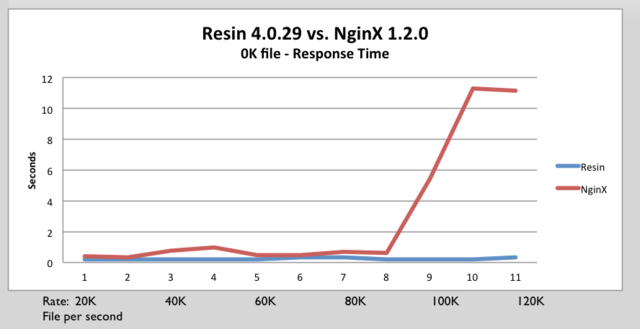

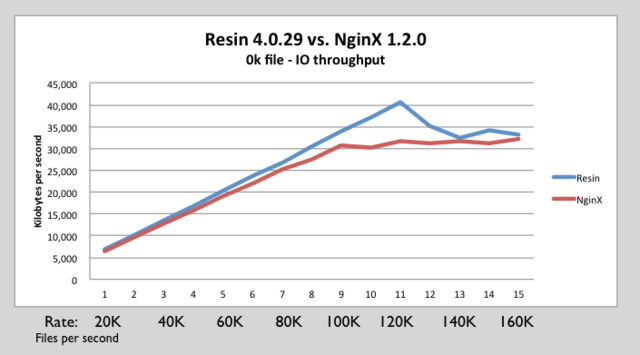

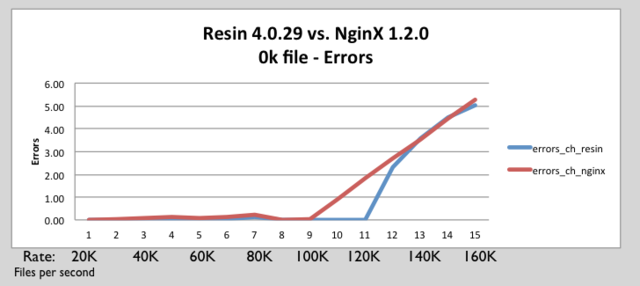

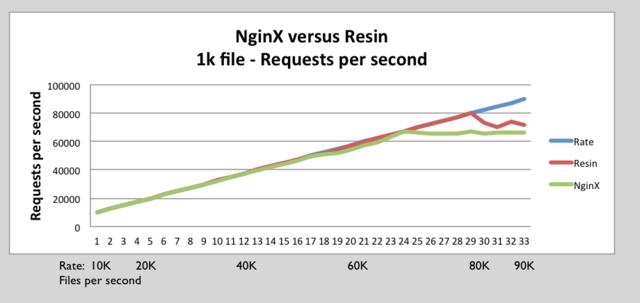

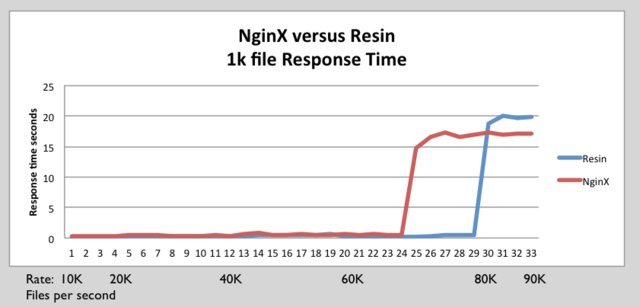

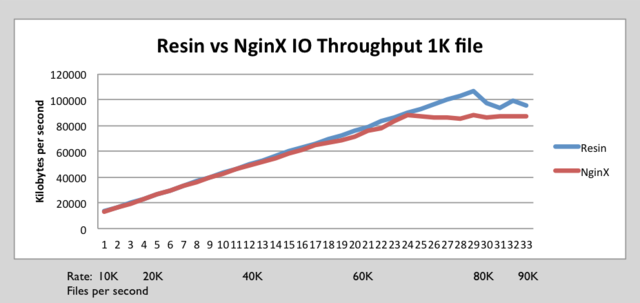

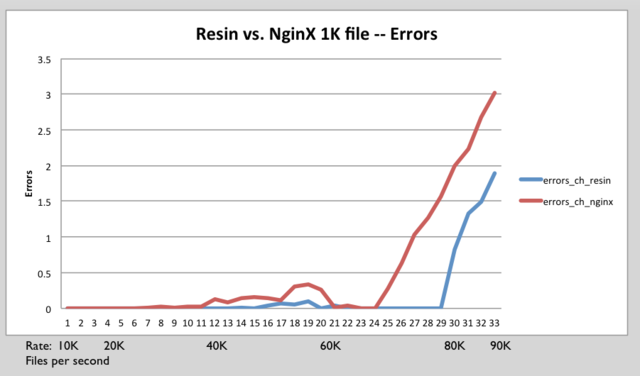

We have recently run some performance benchmarks comparing Resin 4.0.29 versus NginX 1.2.0. These benchmarks show that Resin Pro matches or exceeds NginX's throughput.


Benchmark tools

The benchmark tests used the following tools:

- httperf

- AutoBench

httperf

httperf is a tool produced by HP for measuring web server performance. It supports HTTP/1.1 keep-alives and SSL.
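As a rough illustration of what a single httperf run looks like, the sketch below builds one invocation and prints it rather than executing it. The host, port, and URI are taken from the admin.sh script shown later on this page. The helper name `httperf_cmd` and the values of 1000 connections and 100 connections per second are assumptions chosen only for the example.

```shell
#!/bin/sh
# Hypothetical helper: print (not run) one httperf invocation.
# --num-conns / --rate values are illustrative, not the ones used in the tests.
httperf_cmd() {
  host=$1; port=$2; uri=$3; rate=$4
  echo "httperf --server $host --port $port --uri $uri" \
       "--num-conns 1000 --num-calls 10 --rate $rate --timeout 3"
}

# 10 requests per connection over keep-alive, 3 second timeout
httperf_cmd ch_resin 8080 /file_0k.html 100
```

Piping the printed line to `sh` would run the test on a machine where httperf is installed.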

AutoBench

AutoBench is a tool for automating comparative benchmark tests against two web servers. It is built on httperf: it runs httperf against each host and increases the request rate on each iteration. AutoBench delivers its output in a format that spreadsheet tools can easily consume. It also has a distributed mode in which it drives multiple clients against a set of servers, which minimizes the risk of measuring client throughput instead of server throughput. In this mode, the autobenchd command runs as a daemon on each client machine, and the autobench_admin command communicates with autobenchd to make all the clients run the test at the same time.

Setup Overview

Configuration

The only configuration change was setting worker_processes to 8 for NginX to improve throughput.
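In nginx.conf, the worker_processes directive belongs to the main (top-level) context. A minimal fragment, assuming the rest of the stock configuration is left as installed, would look like this:

```nginx
# Main context of nginx.conf: run 8 worker processes
worker_processes 8;
```

The value 8 lines up with the 8 hardware threads of the server listed in the specifications, although the page does not state that this was the reason for choosing it.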

Hardware and Software Specifications

Client HW/OS specs:

- i7, 4 cores / 8 hardware threads, 2.8 GHz, 8 MB cache, 8 GB RAM.

- Ubuntu 12 / Linux Kernel 3.2.0-26-generic

Server HW/OS specs:

- i7, 4 cores / 8 hardware threads, 2.8 GHz, 8 MB cache, 8 GB RAM.

- Ubuntu 12 / Linux Kernel 3.2.0-26-generic

Test software:

- Autobench 2.1.1

- httperf 0.9.0

Software under test:

- Resin Pro 4.0.29

- nginx 1.2.0

0k test

Command Line Arguments

0k.sh

./admin.sh 300000 2000 20000 1000 0k

admin.sh

autobench_admin --clients xen:4600,lancre:4600 --uri1 /file_$5.html --host1 ch_resin --port1 8080 --uri2 /file_$5.html --host2 ch_nginx --port2 80 --num_conn $1 --num_call 10 --low_rate $2 --high_rate $3 --rate_step $4 --timeout 3 --file out_con$1_start$2_end$3_step$4_$5.tsv

The command above sets up 300,000 connections, each making 10 requests (--num_call 10). The connection rate runs from 2,000 to 20,000 connections per second in steps of 1,000, which corresponds to 20,000 to 200,000 requests per second in steps of 10,000.
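The request rates quoted here follow from the script arguments once each rate is multiplied by the --num_call value. This assumes httperf's usual convention that the rate counts connections per second and each connection issues --num_call requests. The sketch below prints that mapping for every iteration. The function name `rate_table` is made up for this example.

```shell
#!/bin/sh
# Hypothetical helper: list each test iteration as
# "<connections per second> <requests per second>".
rate_table() {
  low=$1; high=$2; step=$3; calls=$4
  rate=$low
  while [ "$rate" -le "$high" ]; do
    echo "$rate $((rate * calls))"
    rate=$((rate + step))
  done
}

# Values from 0k.sh (2000 20000 1000) and admin.sh (--num_call 10)
rate_table 2000 20000 1000 10
```

Running `rate_table 1000 10000 250 10` with the 1k.sh arguments gives 10,000 to 100,000 requests per second in steps of 2,500.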

0k html file file_0k.html

<html> <body> <pre></pre> </body> </html>

0k full Results for 0K test

1K test

Command line

1k.sh

./admin.sh 200000 1000 10000 250 1k

admin.sh

autobench_admin --clients xen.caucho.com:4600,lancre.caucho.com:4600 --uri1 /file_$5.html --host1 ch_resin --port1 8080 --uri2 /file_$5.html --host2 ch_nginx --port2 80 --num_conn $1 --num_call 10 --low_rate $2 --high_rate $3 --rate_step $4 --timeout 3 --file out_con$1_start$2_end$3_step$4_$5.tsv

1k.html

<html>
<body>
<pre>
0 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789
1 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789
2 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789
3 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789
4 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789
5 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789
6 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789
7 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789
8 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789
9 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789 0123456789
</pre>
</body>
</html>
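A page of this shape can be regenerated with a short script, which may be handy when reproducing the test. The sketch below assumes one row per line and writes to file_1k.html, the name implied by the /file_$5.html URI in admin.sh. The original file's exact whitespace is not known, so the result is roughly 1 KB and not necessarily byte-identical. The function name `gen_1k_html` is made up for this example.

```shell
#!/bin/sh
# Hypothetical helper: emit an HTML page with 10 rows, each a row index
# followed by nine space-separated copies of "0123456789".
gen_1k_html() {
  printf '<html>\n<body>\n<pre>\n'
  row=0
  while [ "$row" -le 9 ]; do
    printf '%s' "$row"
    col=0
    while [ "$col" -lt 9 ]; do
      printf ' 0123456789'
      col=$((col + 1))
    done
    printf '\n'
    row=$((row + 1))
  done
  printf '</pre>\n</body>\n</html>\n'
}

# Write the page and report its size in bytes
gen_1k_html > file_1k.html
wc -c file_1k.html
```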